Hacking AI Safety with Human Quirks

2024 is shaping up to be a year for the history books. The Civic Hacker team has partnered with the National Institute of Standards and Technology (NIST). We joined a consortium of organizations working together to ensure that the AI you interact with online is safe and trustworthy. We aim to make notable contributions to NIST's AI safety work using our anti-oppressive theory of change, prototypes, data, and codebases.

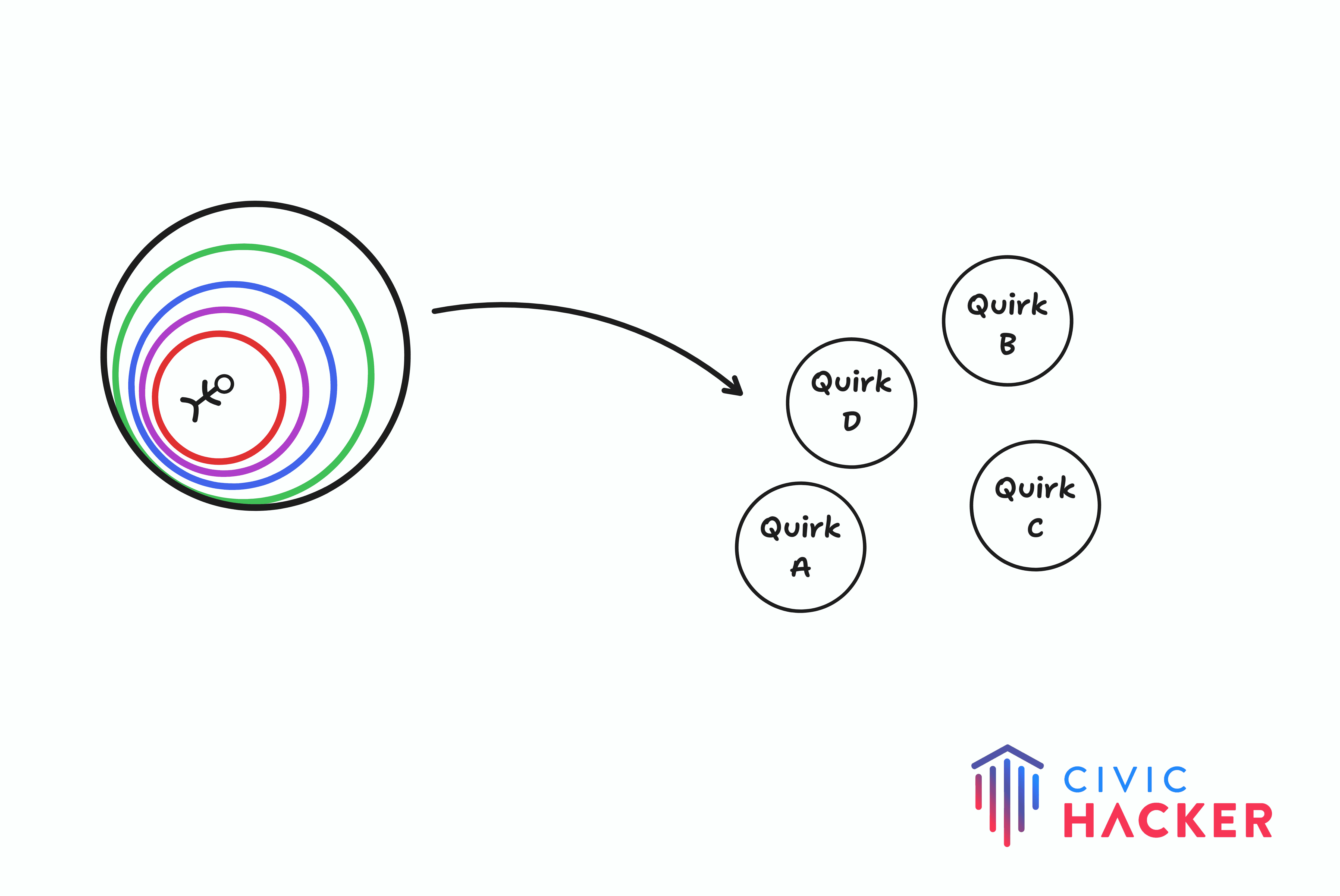

We believe meaningful contributions to AI Safety require inquiry into artificial intelligence's impact on distinctive parts of the human experience we call 'Quirks.' Quirks are not inherently positive or negative; they are characteristics of the Human experience that anyone can quickly identify, like work-life balance, gender-based violence, copyright protection, privacy, algorithmic injustice, elections, and misinformation, to name a few. We readily identified Quirks using Bronfenbrenner's Ecological Systems Theory¹. Each Quirk links to what individuals experience directly and indirectly through their environment (school, neighborhood, political climate, etc.).

(Bronfenbrenner’s Ecological Systems decomposes into Quirks)

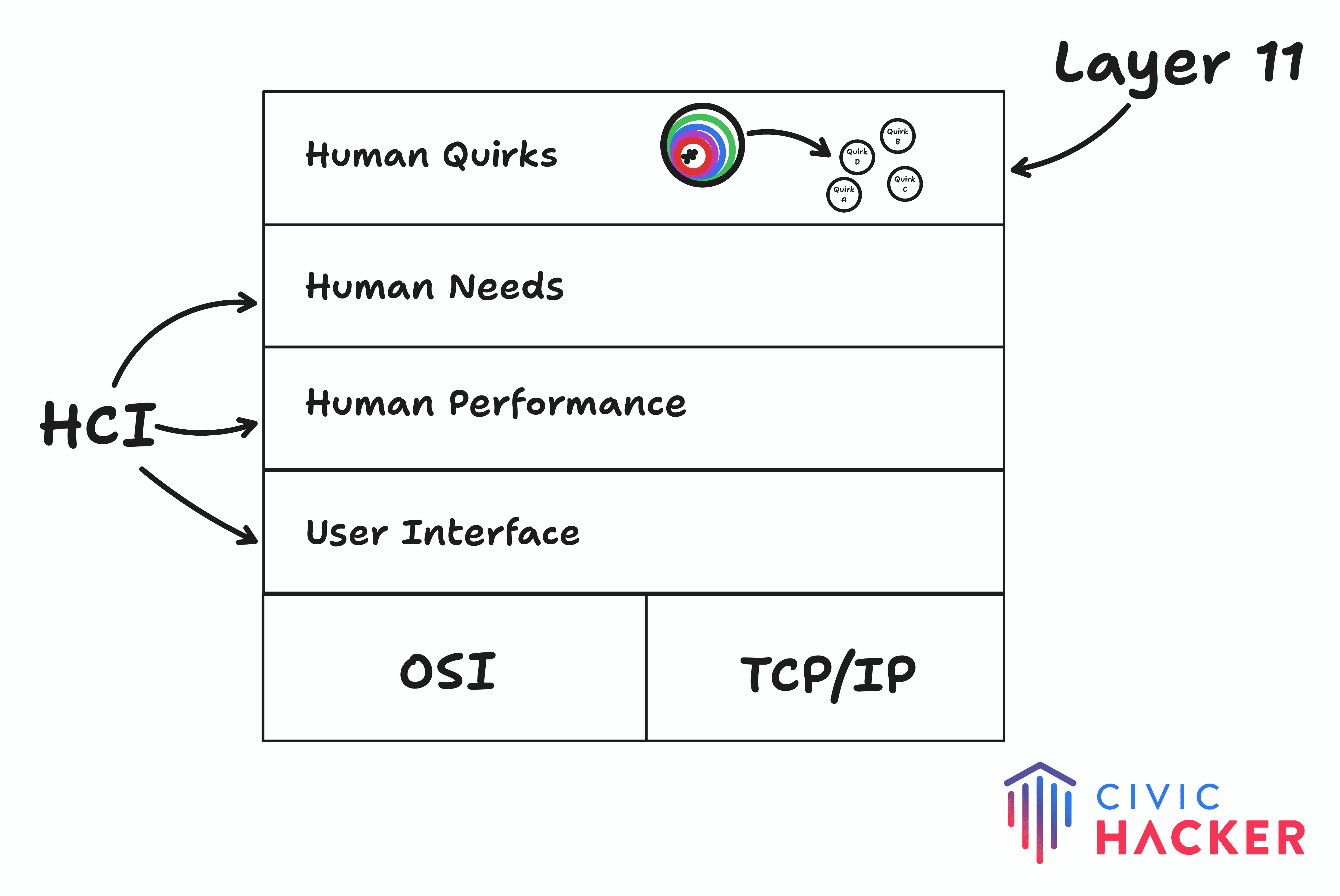

The technologies used to build AI systems are widely available, relatively cheap, and built on the Open Systems Interconnection (OSI) model². We will interact with AI across multiple modalities, not just through the visual information on screens. Our interaction with AI will continue to mix audio, tactile, and gesture-based user interfaces. Like all digital technology, AI can detract from or contribute to Human Performance (memory, motor control, cognition) while impeding or satisfying Human Needs. Human Quirks reveal an eleventh layer to consider when building atop Human Needs like security, education, entertainment, and communication³.

(prototype revealing new eleventh layer to system design)
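To make the layering concrete, here is a minimal sketch of the stack described above. It assumes the seven standard OSI layers, the three human-factor layers (Display, Human Performance, Human Needs) from the ten-layer model linked in the footnotes, and Human Quirks placed on top as layer eleven. The `Layer` class, the `build_stack` function, and the group labels are hypothetical names chosen for illustration; they are not taken from the Human Quirks prototype.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    number: int   # position in the stack, 1 = lowest
    name: str
    group: str    # "OSI", "HCI", or "Quirks" (labels are an assumption)


# The seven standard OSI layers, lowest first.
OSI_LAYERS = [
    "Physical", "Data Link", "Network", "Transport",
    "Session", "Presentation", "Application",
]

# Human-factor layers 8 to 10, as in the ten-layer model cited in the footnotes.
HCI_LAYERS = ["Display", "Human Performance", "Human Needs"]


def build_stack() -> list[Layer]:
    """Return the eleven-layer stack, numbered from 1 (Physical) upward."""
    named = (
        [(name, "OSI") for name in OSI_LAYERS]
        + [(name, "HCI") for name in HCI_LAYERS]
        + [("Human Quirks", "Quirks")]  # the proposed eleventh layer
    )
    return [Layer(i, name, group) for i, (name, group) in enumerate(named, start=1)]


if __name__ == "__main__":
    # Print the stack top-down, the way layer diagrams are usually drawn.
    for layer in reversed(build_stack()):
        print(f"{layer.number:>2}  {layer.name}  ({layer.group})")
```

Reading the output from the top down shows Human Quirks resting directly on Human Needs, which is the relationship the paragraph above describes.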

Like our other projects, our formula involves specific pieces of software, policy, and data we can all create that help our Quirks remain resilient to the influence of AI. We've already found several codebases that prove helpful to our approach. For example, Nightshade (from the University of Chicago) helps protect visual artists from companies that train AI models on their art: it subtly alters images so that a model trained on them learns distorted associations. You can learn more about the prototype and review other resources at humanquirks.com by selecting the 'resources' menu.

Step One: Split into Groups

The consortium members will split into five Working Groups, each with a different focus area and deliverables. You can find the full descriptions on the NIST website, but I've summarized the five Working Groups below:

Risk Management for Generative AI. Create a companion manual and other resources on applying the AI Risk Management Framework to generative AI use cases.

Synthetic Content. Identify the existing standards, tools, methods, and practices related to labeling, detecting, auditing, and maintaining synthetic content, and create countermeasures that prevent generative AI from producing child sexual abuse material or non-consensual intimate imagery of real individuals.

Capability Evaluations. Create guidance, benchmarks, and test environments for evaluating and auditing AI capabilities, focusing on capabilities through which AI could cause harm.

Red-Teaming. Establish guidelines to enable developers of AI, especially of dual-use foundation models, to conduct AI red-teaming tests.

Safety & Security. Coordinate and develop guidelines and procedures for managing the safety and security of dual-use foundation models.

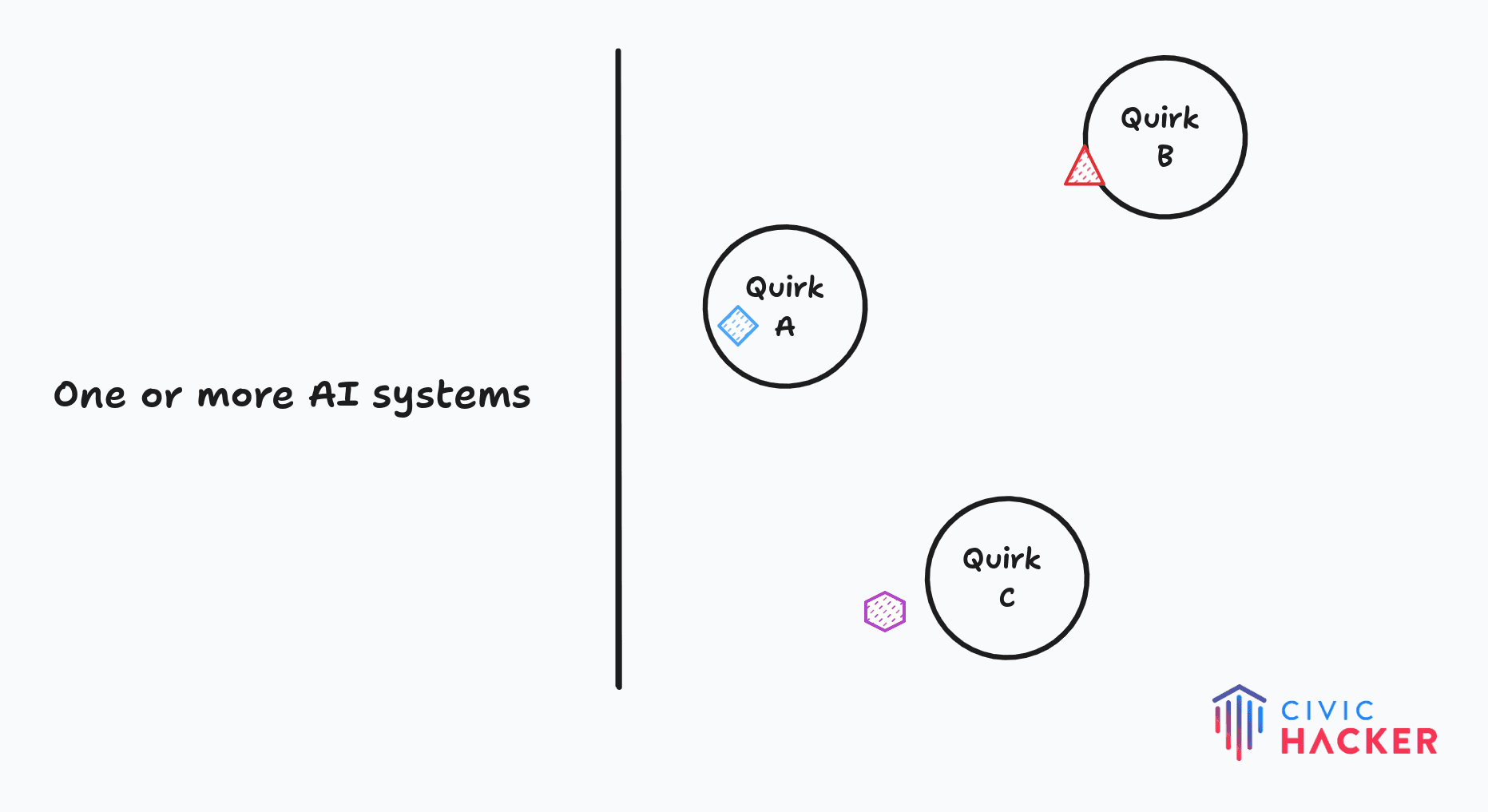

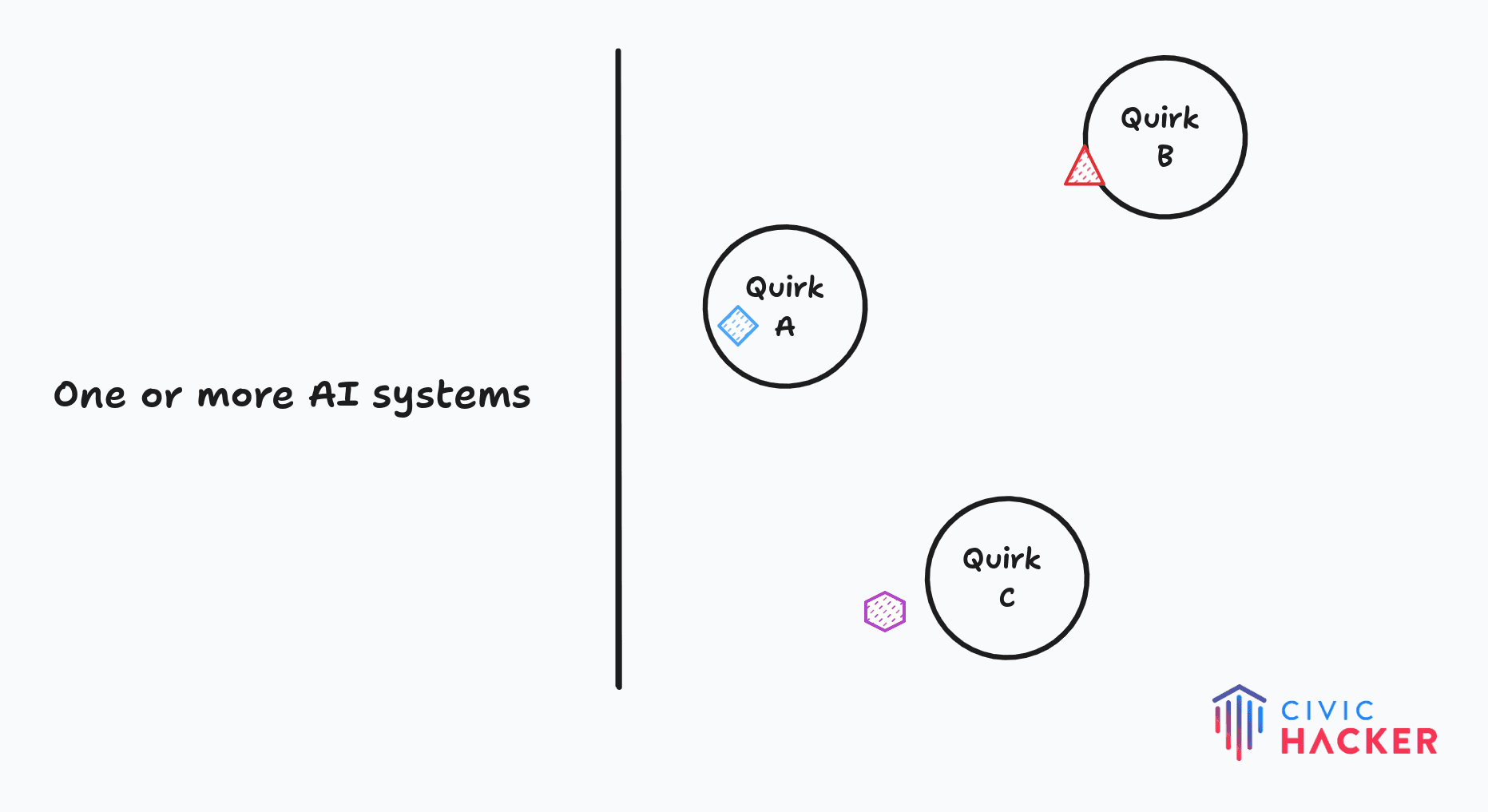

(prototype displaying Human Quirks confronting AI systems)

We immediately see that there are a number of techniques we can employ to protect our Quirks.

The Work Begins Now

We've pinpointed where we can make decisive contributions while onboarding. I expect us to have notable findings to share with both consortium members and Civic Hacker supporters shortly. In the meantime, here are some guiding questions we're mulling over internally. We don't have all the answers (yet):

- Which of our collective social traits or Quirks are sensitive to the advances of AI?

- Is there a way to steer how our Quirks and Web-based technologies interact to improve the lives of others?

- How do we fortify against the negative impact of AI while still encouraging innovation?

As always, we encourage you to support Civic Hacker by funding our research or by using our services.

Footnotes

1. Bronfenbrenner, U. (1969). Motivational and social components in compensatory education programs: Suggested principles, practices, and research designs. In E. H. Grotberg (Ed.), Critical issues in research related to disadvantaged children (pp. 1–32). Princeton, NJ: Educational Testing Service.

2. The Open Systems Interconnection (OSI) model describes the layered components used to build network-connected systems.

3. The Human-Computer Interaction model adds human-factor layers atop the OSI model. It does not mention other models: https://andrewpatrick.ca/OSI/10layer.html