Data Equity means protecting people and their data

Massive databases of personal data put vulnerable groups in danger of unfair targeting by law enforcement, exploitation, and identity theft. Machine learning amplifies these dangers even when the intentions are to protect and serve.

In a previous blog post, we defined Inequity as

an instance of unfairness or injustice.

Noble efforts such as Project Recon and Data for Black Lives seek opportunities to use data to benefit oppressed communities. Their projects attempt to relieve the pains created by profit-driven, heinous, and unethical data collection and use. These data-driven crusaders must exist and stay true to their missions.

Alas, equity as a concept proves elusive to even the most well-intentioned organizations. When used as a tool by oppressors, language strips oppressed groups of the ability to combat exploitation. A clear and actionable definition of Data Equity is our weapon against oppression.

Data Equity is the assurance of soundness and fairness in any decisions, predictions, and value realized from data.

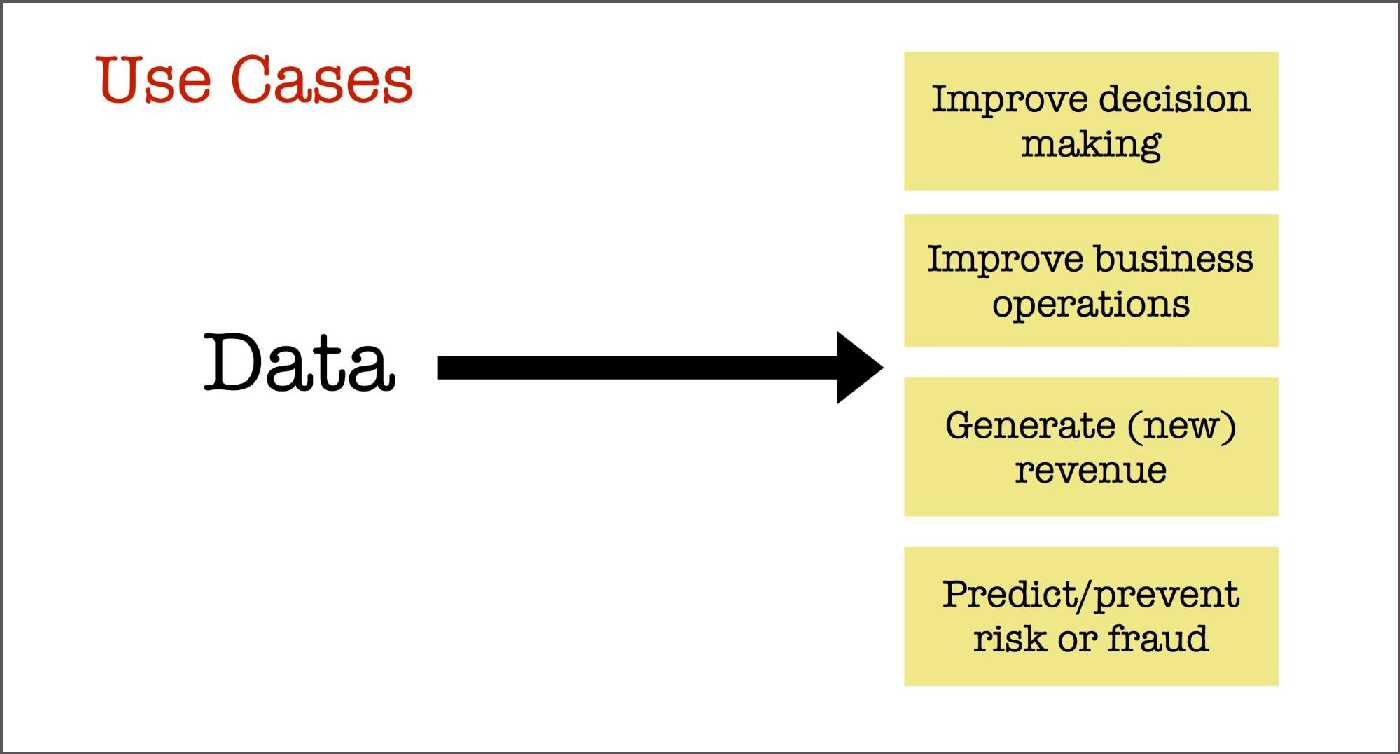

In law enforcement, legislation, science, and business, we use data to aid human decision-making. We use machines to boost human productivity in managing, collecting, and analyzing data, and in performing repetitive tasks.

(Courtesy of O'Reilly)

From Civic Hacker's point of view, data equity has three components:

- Data & Information Security

- Protect data contributors (People)

- Defend machine learning systems from cyber attacks

Let's take a look at each component.

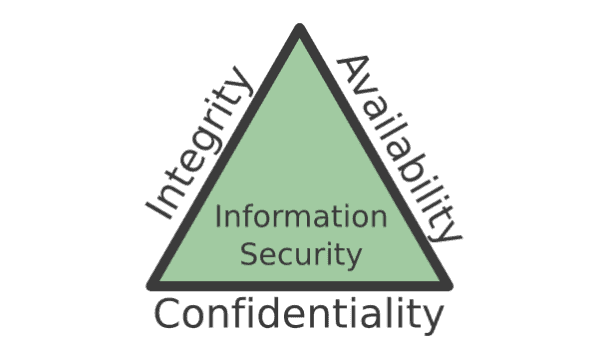

Data & Information Security

(Courtesy of U.S. Central Intelligence Agency)

Data and information security boils down to treating data with care. Anything that compromises data integrity, availability, or confidentiality is what Civic Hacker calls a breach. There's no shortage of policies and practices designed to inform consumers and protect them from shady uses of their data online. Such regulations include the General Data Protection Regulation (GDPR) put forth by the European Union and the California Consumer Privacy Act (CCPA). These regulations outline practices that organizations should implement to ensure their users stay informed and protected. They do not preclude additional rules on handling health information, children's data, or financial transactions.

- If you serve users in the European Union, become GDPR compliant

- If you serve California residents, become CCPA compliant

- Reach out to us for help with implementing the NIST Cybersecurity Framework

- Track any new regulations related to health information (New HIPAA Safe Harbor)
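As a small illustration of treating data with care, the sketch below touches two of the three properties named above: confidentiality (replacing a direct identifier with a keyed hash) and integrity (detecting that a stored record was altered). It uses only Python's standard library. The function names, the sample record, and the hard-coded key are hypothetical and exist only for this example; none of this is a substitute for GDPR, CCPA, or NIST guidance.

```python
import hashlib
import hmac
import json


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed SHA-256 hash (confidentiality)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def fingerprint(record: dict) -> str:
    """Return a SHA-256 digest of the record's contents (integrity)."""
    # Sorting keys makes the digest independent of dictionary ordering.
    canonical = json.dumps(record, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_intact(record: dict, expected_fingerprint: str) -> bool:
    """Check a record against the fingerprint saved when it was stored."""
    return hmac.compare_digest(fingerprint(record), expected_fingerprint)


# Hypothetical key: in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"example-only-key"

record = {
    "contributor": pseudonymize("jane@example.org", SECRET_KEY),
    "zip_code": "21201",
}
stored_fingerprint = fingerprint(record)

print(is_intact(record, stored_fingerprint))   # unchanged record
record["zip_code"] = "00000"                   # simulate tampering
print(is_intact(record, stored_fingerprint))   # altered record
```

A keyed hash is used rather than a plain hash so that someone without the key cannot rebuild the mapping by hashing a list of known email addresses.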

Protect Your Data Contributors

Long story short: never choose data over people. Be transparent, and compensate where you can. Here are a couple of examples of data collection used in tandem with oppression and exploitation.

Henrietta Lacks was a Black woman whose cancer cells were taken during her treatment in 1951 without her knowledge or consent. Her family was not notified or compensated, and they did not grant permission. Work using her cells led to significant advances in cancer research. Henrietta's and her family's humanity was sacrificed for the good of society. How convenient.

Facebook, Inc. was penalized by the U.S. Federal Trade Commission for unethically collecting images from its users' devices to help develop its facial recognition technology. Facebook was fined 5 billion US dollars.

Ensure vulnerable groups can realize the value of their data

Like Creative Commons Licenses, the Open Data Commons group provides licenses designed to protect the interests of those who wish to share and consume data sources that benefit the human condition.

Abuse is easily avoided when organizations care enough about people to do at least one of the following:

- Develop a Data Contribution Agreement

- Be transparent about how you use automated tools

- Ensure the results do not hurt a protected group

- Avoid Cross-Device tracking (financial, health, children info)
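One way to start checking whether results hurt a protected group is to compare how often each group receives the favorable outcome. The sketch below computes that ratio and flags it when it falls under 0.8, the "four-fifths" rule of thumb used in U.S. employment selection guidelines. The group labels, sample decisions, and function names are hypothetical, and the threshold is a screening heuristic under those assumptions, not a legal or complete fairness test.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Return the share of favorable outcomes per group.

    `outcomes` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes, protected, reference):
    """Ratio of the protected group's selection rate to the reference group's."""
    rates = selection_rates(outcomes)
    if rates[reference] == 0:
        raise ValueError("reference group has no favorable outcomes")
    return rates[protected] / rates[reference]


# Hypothetical decisions from an automated tool: 3 of 10 approved in group_a,
# 6 of 10 approved in group_b.
decisions = (
    [("group_a", True)] * 3 + [("group_a", False)] * 7
    + [("group_b", True)] * 6 + [("group_b", False)] * 4
)

ratio = disparate_impact_ratio(decisions, protected="group_a", reference="group_b")
if ratio < 0.8:
    print(f"Review needed: ratio {ratio:.2f} is below the 0.8 rule of thumb")
```

A low ratio does not prove harm on its own, and a passing ratio does not prove fairness; it is a prompt for people to look closer at the tool and the data behind it.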

Defend Machine Learning Systems against cyber attacks

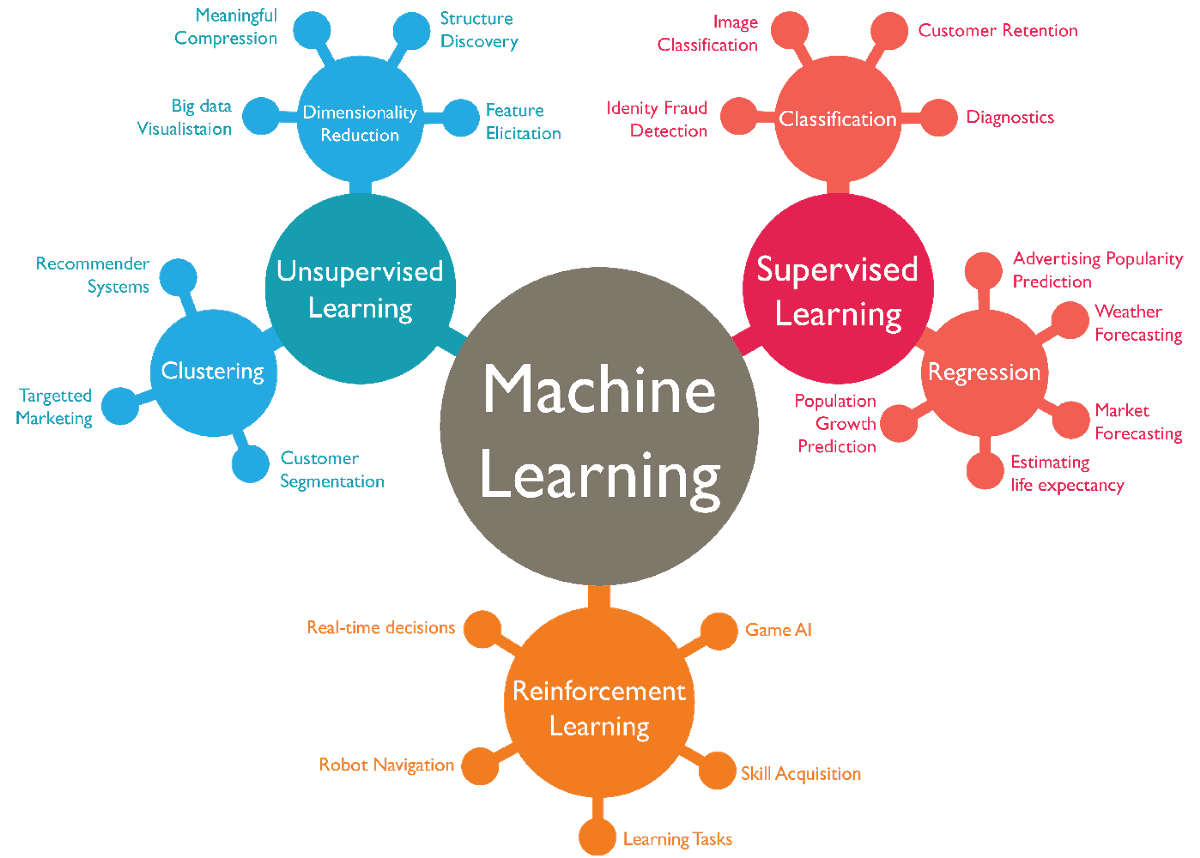

With Machine Learning, we depend on algorithms and math to aid in making decisions on behalf of humans. Hacking a Machine Learning system is different from the biases embedded in ML algorithms. However, each can amplify the impact of the other.

Tay: Microsoft's Neo-Nazi Twitter bot

Tay was a Twitter chatbot built to entertain U.S. audiences. Within 24 hours of launch, Tay was taken down because, after users deliberately fed it offensive content, it posted racist and antisemitic statements and images.

Trigger warning: Click here to see some examples, but don't say we didn't warn you.

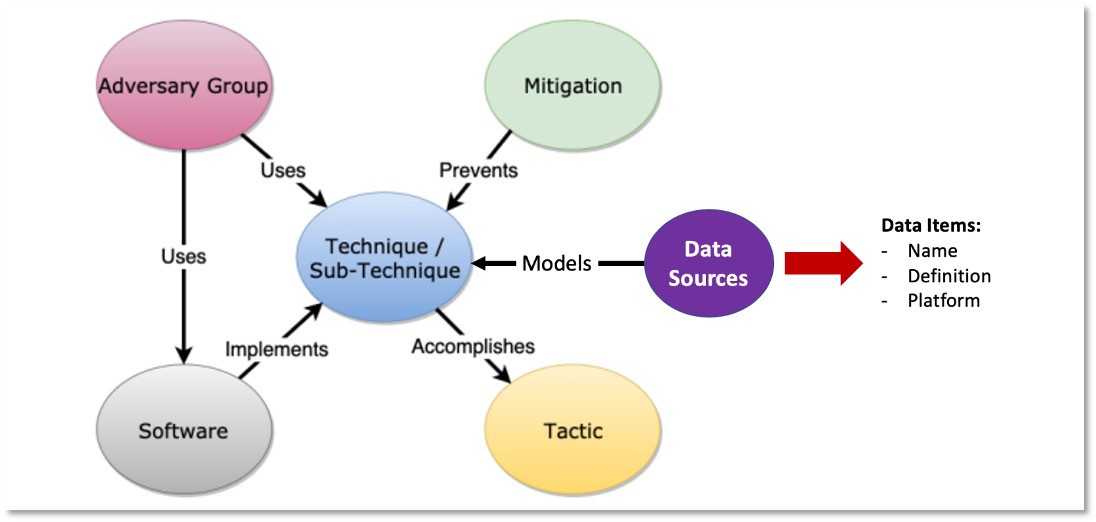

I plan to cover specific case studies of attacks on ML systems in detail in later blog posts. For now, you should know that attacks on machine learning systems are successful and frequent enough that the field has an official name: Adversarial Machine Learning. Major companies such as Google, Amazon, Microsoft, and Tesla have had their ML systems tricked, evaded, or misled.

Thankfully, recent victims of Adversarial Machine Learning attacks have started publishing their work to give the community a means to develop lasting defenses. Existing security response teams can expect guidance and resources on adapting their current Threat Modeling[1] and Threat Hunting[2] practices to address this new class of attacks.

(ATT&CK Framework; The MITRE Corporation)
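To make the idea of an ML system being evaded more concrete, here is a toy sketch of an evasion attack on a linear classifier. It nudges every input feature by a small amount in the direction that pushes the model's score across its decision boundary, in the spirit of the fast gradient sign method. The weights, bias, input, and step size are all hypothetical, and real attacks on production systems are far more involved.

```python
def score(weights, bias, features):
    """Linear model score; a positive score means class 1."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def predict(weights, bias, features):
    return 1 if score(weights, bias, features) > 0 else 0


def sign(value):
    return (value > 0) - (value < 0)


def evade(weights, bias, features, epsilon):
    """Shift each feature by at most `epsilon` against the current prediction.

    For a linear model the gradient of the score with respect to the input is
    the weight vector, so stepping along sign(weights) changes the score fastest
    for a given per-feature budget.
    """
    direction = -1 if predict(weights, bias, features) == 1 else 1
    return [x + direction * epsilon * sign(w) for x, w in zip(features, weights)]


# Hypothetical model and input.
weights = [2.0, -1.0, 0.5]
bias = -0.5
original = [1.0, 1.0, 1.0]

adversarial = evade(weights, bias, original, epsilon=0.4)

print(predict(weights, bias, original))      # prediction on the clean input
print(predict(weights, bias, adversarial))   # prediction after the small nudge
```

The point of the sketch is that no feature moves by more than 0.4, yet the predicted class changes. Threat modeling for ML systems asks which inputs an attacker can nudge this way and what a flipped prediction would cost the people affected.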

The Recap

Data Equity is the assurance of soundness and fairness in any decisions, predictions, and value realized from data.

- Keep Black data safe (NIST Cybersecurity Framework)

- Data Contribution Agreements (transparency, compensation, usage)

- Threat Hunting/Modeling to defend against attacks on ML systems

I hope this information proves useful. Keep this work alive by sponsoring.

Footnotes

1. Threat Modeling is the process of using hypothetical scenarios, system diagrams, and testing to help secure systems and data. https://www.cisco.com/c/en/us/products/security/what-is-threat-modeling.html (Jan 02, 2021)

2. Threat Hunting is proactively searching for malware or attackers that are hiding within a network. https://cybersecurity.att.com/blogs/security-essentials/threat-hunting-explained (Jan 02, 2021)